Hello World

Learn why we're building pi_optimal: from experiencing the limitations of Reinforcement Learning in real-world applications to realizing that this powerful technology remains locked behind big tech's walls. Discover our journey and our mission to democratize AI by making superhuman automation accessible to companies of all sizes, because game-changing technology shouldn't be limited to tech giants.

Why We're Democratizing Reinforcement Learning

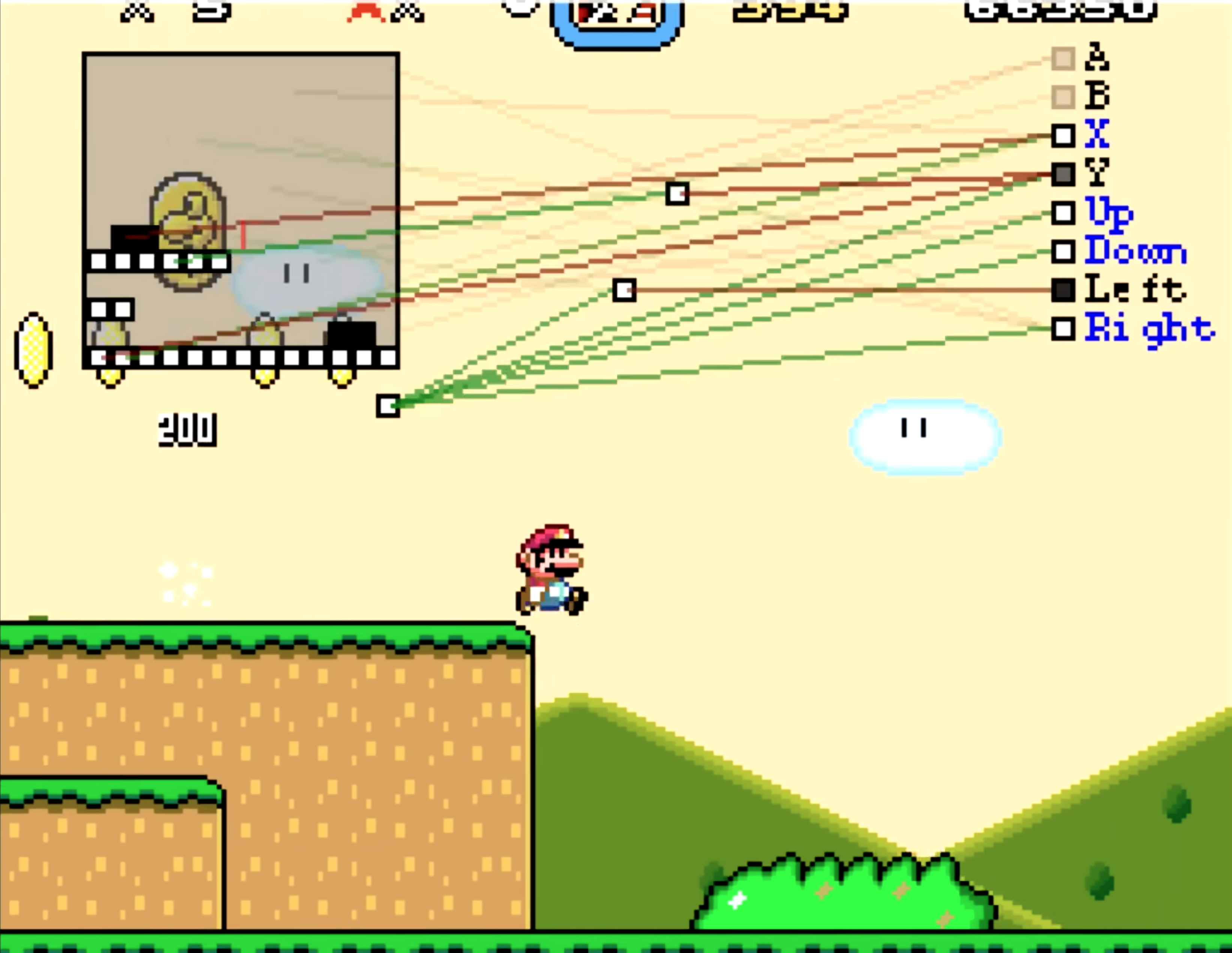

Eight years ago, I stumbled upon a YouTube video that would change the trajectory of my career. On my screen, an AI was teaching itself to play Super Mario Bros. With each attempt, it failed, learned, and improved, until it finally mastered the level. There were no predefined rules or explicit instructions, just pure learning through interaction. As a business and psychology student at the time, I was mesmerized by this display of artificial intelligence teaching itself through trial and error.

Source: SethBling

What I didn't know then was that I was watching Reinforcement Learning (RL) in action, a technology that would become my passion and eventually lead to the creation of pi_optimal. This early exposure to RL's potential sparked something in me and compelled me to shift my academic focus toward artificial intelligence during my master's studies.

The Promise of Reinforcement Learning

The timing couldn't have been better. DeepMind was making headlines with its DQN algorithm, demonstrating that a single learning algorithm, with the same network architecture and hyperparameters, could master dozens of Atari games. The idea that AI could learn from experience, much like humans do, wasn't just fascinating; it felt like the future of computing.

However, the gap between academic promise and business reality soon became apparent. While working as a data scientist at a marketing agency, I encountered a perfect use case for RL: sequential decision-making in campaign management. Campaign managers monitored digital campaigns throughout the day, adjusting them to hit specific goals such as maximizing conversions while staying within budget. The process was repetitive, time-consuming, and prone to human error, so it seemed natural that an RL algorithm could automate these adjustments and lighten the workload. But there was a catch. Traditional RL approaches required thousands of failed attempts before achieving success, a luxury no business could afford. We couldn't tell our clients, "Just wait through 100,000 failed campaigns, and then the AI will be brilliant!"

The Reality Check

This challenge wasn't unique to us. At data science conferences, I'd meet other data scientists who shared similar stories: they saw RL's potential and attempted an implementation, but couldn't make it work in practice. The technology that seemed so promising in research papers felt frustratingly out of reach in the real world.

We faced three major hurdles:

- Handling Different Types of Decisions: Our optimization required managing multiple types of decisions at once. For example, we needed to decide which audience whitelist to target (a categorical choice), adjust the cost-per-thousand impressions (CPM) bid amount (a continuous variable), and determine whether to pause or continue a campaign (a binary decision). Most RL algorithms weren’t designed to handle these diverse requirements simultaneously.

- The Risks of Live Experimentation: Traditional RL often learns by trial and error, which wasn’t practical in a live setting. We couldn’t risk experimenting directly on active campaigns; it was too costly and unpredictable.

- Limited Tools for Pre-Trained Models: Offline RL, where algorithms learn from existing data instead of live trials, was still in its infancy. There weren’t many tools or best practices available to bridge the gap between research and production.
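To make the first hurdle concrete, here is a minimal Python sketch of what a single mixed action for one campaign might look like. The audience names, the CPM bounds, the 0.5 pause threshold, and the `decode_action` helper are all hypothetical illustrations, not pi_optimal's API; the sketch only shows how one decision can combine a categorical, a continuous, and a binary component.

```python
from dataclasses import dataclass

# Hypothetical audience whitelists; the names are placeholders, not real data.
AUDIENCES = ("whitelist_a", "whitelist_b", "whitelist_c")

# Hypothetical bounds for the CPM bid, in the campaign's currency.
CPM_MIN, CPM_MAX = 0.5, 20.0


@dataclass(frozen=True)
class CampaignAction:
    audience: str   # categorical: one entry from AUDIENCES
    cpm_bid: float  # continuous: bid per thousand impressions
    paused: bool    # binary: pause the campaign or keep it running


def decode_action(audience_index: int, raw_bid: float, pause_score: float) -> CampaignAction:
    """Map raw policy outputs onto one valid mixed action (hypothetical helper).

    audience_index selects a whitelist, raw_bid is clipped into [CPM_MIN, CPM_MAX],
    and pause_score is thresholded at 0.5 to produce the binary decision.
    """
    if not 0 <= audience_index < len(AUDIENCES):
        raise ValueError(f"audience_index out of range: {audience_index}")
    bid = min(max(raw_bid, CPM_MIN), CPM_MAX)
    return CampaignAction(
        audience=AUDIENCES[audience_index],
        cpm_bid=bid,
        paused=pause_score >= 0.5,
    )


# An out-of-range bid of 35.0 is clipped to the assumed maximum of 20.0.
action = decode_action(audience_index=1, raw_bid=35.0, pause_score=0.2)
print(action)
```

A policy that handles this kind of action has to produce all three components at once, which is exactly what many standard RL algorithms, built for purely discrete or purely continuous actions, do not support out of the box.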

Building Our Own Path

Realizing the potential of this approach, we cautiously embarked on the journey from prototype to production. It was anything but straightforward. Debugging RL algorithms often felt like stumbling in the dark: policies that seemed promising in simulation would behave unpredictably in live environments, leaving us questioning what we had missed. We ran countless experiments, not just to improve performance but also to understand why the model behaved the way it did. Each small breakthrough came with new challenges that demanded patience and persistence.

Scaling the solution added another layer of complexity. We had to build infrastructure that could support large-scale simulations for training while also enabling reliable real-time inference in production. With limited resources, we relied heavily on cloud-based systems to balance speed, scalability, and cost-effectiveness. At times, progress felt painstakingly slow, but each obstacle taught us valuable lessons about bridging the gap between research and practical application.

But the results? They were worth every sleepless night. We watched our AI achieve superhuman performance in campaign management, delivering automation rates and performance KPIs that had seemed impossible just months earlier.

The Vision Behind pi_optimal

Yet this success story highlighted a troubling reality in the AI landscape. While tech giants have teams of RL researchers and vast resources for experimentation, most companies can't afford extended trial-and-error periods or specialized expertise. This technology gap isn't just unfair; it's holding back innovation across industries.

This realization became the driving force behind pi_optimal. We've lived through the challenges of implementing RL in the real world, and we believe this technology should be accessible to everyone, not just big tech. Our mission is to democratize reinforcement learning, making it practical and accessible for companies and organizations of all sizes.

But we also know this isn’t something we can achieve alone. The power of open source lies in the community. It’s through collaboration, shared ideas, and collective effort that real change happens. That’s why we’re excited to announce that we’ll be open-sourcing pi_optimal in Q1 2025. We want to empower developers, researchers, and organizations to not only use RL but to shape its future with us.

We’re inviting you to join us on this journey. Whether you’re curious about reinforcement learning, already an expert, or just want to explore what’s possible, there’s a place for you in the pi_optimal community. Sign up for early access to our preview and join our Slack community, where we’re exchanging ideas, solving problems, and building the next generation of RL tools together.

Published on December 20, 2024 by Jochen Luithardt