Facebook Optimization with RL

In the fast-paced world of digital advertising, platforms like Meta dominate, generating over $100 billion in revenue as of 2024 [1]. For digital marketing agencies, navigating Meta’s advertising ecosystem poses unique challenges. Meeting specific campaign goals, such as a target number of impressions, within a constrained budget often requires continuous monitoring and adjustment, which makes the process resource-intensive and error-prone.

Problem Statement

Unlike traditional advertising channels, where metrics such as impressions can be controlled directly, Meta’s platform relies on a performance-driven model influenced by budget allocation. Achieving specific goals, whether impressions or conversions, within budget constraints requires constant oversight. This manual process is labor-intensive and prone to inefficiencies.

pi_optimal addresses these challenges by using Reinforcement Learning (RL) to automate and optimize advertising campaign management. Designed for data science teams, including those unfamiliar with advanced RL concepts, pi_optimal enables efficient control over campaigns to achieve consistent outcomes.

Our goal is twofold:

- Develop a model that optimally configures Facebook campaign settings to meet target impressions.

- Understand the model’s decision-making process to build trust in its predictions.

Dataset

The dataset was provided by a mid-sized German marketing agency and consists of 2,574 Facebook campaigns run between 2019 and 2022. Campaign data is aggregated daily and has the following key features:

Dataset Features

- campaign_id: Unique identifier for each campaign
- date: Date of the data point
- day_of_week: Day of the week
- impression_goal: The target number of impressions for the campaign by the end of its runtime
- daily_impressions: The number of impressions generated by the campaign on that day
- total_impressions: The cumulative number of impressions generated up to and including that day
- target_daily_impressions: The target number of impressions for that day
- target_total_impressions: The cumulative target number of impressions up to that day
- missing_impressions: The difference between the cumulative target and actual impressions as of that day (target_total_impressions minus total_impressions); negative values indicate over-delivery
- total_days: The total runtime of the campaign in days
- remaining_days: The number of days remaining in the campaign’s runtime
- set_cpm: The cost per thousand impressions (CPM) set for the campaign
- feed_facebook_status: Indicates whether the campaign ran on the Facebook Feed that day
- instant_article_facebook_status: Indicates whether the campaign ran on the Instant Article feed that day
- facebook_stories_facebook_status: Indicates whether the campaign ran on the Facebook Stories feed that day
- marketplace_facebook_status: Indicates whether the campaign ran on the Marketplace feed that day
- right_hand_column_facebook_status: Indicates whether the campaign ran in the Right Hand Column that day

The dataset provides a comprehensive view of campaign performance, enabling us to train and evaluate our RL agent.

```python
import pandas as pd

# Load the historical campaign data and order it by campaign and date
df_historical_fb_camapigns = pd.read_csv('data/fb_history.csv', parse_dates=['date'])
df_historical_fb_camapigns.sort_values(['campaign_id', 'date'], inplace=True)
df_historical_fb_camapigns.head()
```

|  | campaign_id | date | day_of_week | impression_goal | daily_impressions | total_impressions | target_daily_impressions | target_total_impressions | missing_impressions | total_days | remaining_days | set_cpm | feed_facebook_status | instant_article_facebook_status | facebook_stories_facebook_status | marketplace_facebook_status | right_hand_column_facebook_status |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 2022-05-30 | Monday | 21000 | 1244.0 | 1244.0 | 1400.0 | 1400.0 | 156.0 | 15.0 | 13.0 | 3.0 | Active | Active | Active | Active | Inactive |
| 1 | 0 | 2022-06-01 | Wednesday | 21000 | 915.0 | 2159.0 | 1400.0 | 2800.0 | 641.0 | 15.0 | 11.0 | 3.0 | Active | Active | Active | Active | Inactive |
| 2 | 0 | 2022-06-08 | Wednesday | 21000 | 5225.0 | 7384.0 | 1400.0 | 4200.0 | -3184.0 | 15.0 | 4.0 | 3.0 | Active | Active | Active | Active | Active |
| 3 | 0 | 2022-06-09 | Thursday | 21000 | 4402.0 | 11786.0 | 1400.0 | 5600.0 | -6186.0 | 15.0 | 3.0 | 3.0 | Active | Active | Active | Active | Active |
| 4 | 0 | 2022-06-10 | Friday | 21000 | 3202.0 | 14988.0 | 1400.0 | 7000.0 | -7988.0 | 15.0 | 2.0 | 2.0 | Active | Active | Active | Active | Active |
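The derived impression columns are linked by simple arithmetic. The following relationships hold on the five rows shown above:

- total_impressions is the running sum of daily_impressions.
- target_daily_impressions equals impression_goal / total_days (21000 / 15 = 1400).
- target_total_impressions is the running sum of the daily targets.
- missing_impressions equals target_total_impressions minus total_impressions.

The sketch below checks these relationships per campaign. The helper name check_derived_columns is our own, and the relationships are inferred from the sample rows rather than from a documented schema.

```python
import numpy as np
import pandas as pd


def check_derived_columns(df: pd.DataFrame) -> dict:
    """Check relationships between the derived impression columns, per campaign.

    Hypothetical helper: the relationships were inferred from sample rows,
    not from a documented schema.
    """
    df = df.sort_values(["campaign_id", "date"])
    by_campaign = df.groupby("campaign_id")
    return {
        # Cumulative impressions are the running sum of daily impressions
        "total_is_cumsum_of_daily": bool(np.allclose(
            by_campaign["daily_impressions"].cumsum(), df["total_impressions"])),
        # The daily target spreads the goal evenly over the runtime
        "daily_target_is_goal_over_runtime": bool(np.allclose(
            df["impression_goal"] / df["total_days"], df["target_daily_impressions"])),
        # The cumulative target is the running sum of the daily targets
        "target_total_is_cumsum_of_daily_target": bool(np.allclose(
            by_campaign["target_daily_impressions"].cumsum(), df["target_total_impressions"])),
        # Positive values mean under-delivery, negative values mean over-delivery
        "missing_is_target_minus_actual": bool(np.allclose(
            df["target_total_impressions"] - df["total_impressions"], df["missing_impressions"])),
    }


# The five rows of campaign 0 from the table above
sample = pd.DataFrame({
    "campaign_id": [0, 0, 0, 0, 0],
    "date": pd.to_datetime(["2022-05-30", "2022-06-01", "2022-06-08", "2022-06-09", "2022-06-10"]),
    "impression_goal": [21000] * 5,
    "daily_impressions": [1244.0, 915.0, 5225.0, 4402.0, 3202.0],
    "total_impressions": [1244.0, 2159.0, 7384.0, 11786.0, 14988.0],
    "target_daily_impressions": [1400.0] * 5,
    "target_total_impressions": [1400.0, 2800.0, 4200.0, 5600.0, 7000.0],
    "missing_impressions": [156.0, 641.0, -3184.0, -6186.0, -7988.0],
    "total_days": [15.0] * 5,
})
print(check_derived_columns(sample))
```

Running the same check on df_historical_fb_camapigns shows whether these relationships hold across all campaigns.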

Speeding up Training and Inference

To speed up training and inference, you can use the Intel Extension for Scikit-learn. It is installed as scikit-learn-intelex and imported as sklearnex, and it provides optimized implementations of many scikit-learn algorithms for Intel CPUs. To enable this optimization, install the package and uncomment the following lines of code:

```python
# Uncomment the following lines for faster training and inference if
# scikit-learn-intelex is installed and you are using an Intel CPU.
# Patching must happen before scikit-learn estimators are imported.
# from sklearnex import patch_sklearn
# patch_sklearn()
```

Defining the Reward Function

To guide the RL model’s learning, we need to define a reward function. This function evaluates the quality of a given campaign state. The RL model then optimizes its decisions to maximize the reward.

In our case, the reward is the negative absolute difference between the cumulative target impressions and the cumulative actual impressions. Maximizing the reward therefore minimizes over- or under-delivery. While this example reward function is straightforward, it can be extended to account for additional factors such as budget utilization or audience targeting.

Implementation

```python
# Function to calculate the reward for a single row
def calculate_reward(row):
    # Reward is the negative absolute difference between the cumulative target
    # and the cumulative actual impressions, i.e. -|missing_impressions|
    reward = -abs(row.target_total_impressions - row.total_impressions)
    return reward
```

Apply the reward function to the dataset

```python
# Apply the reward calculation to every row
df_historical_fb_camapigns['reward'] = df_historical_fb_camapigns.apply(calculate_reward, axis=1)
df_historical_fb_camapigns.head()
```

|  | campaign_id | date | day_of_week | impression_goal | daily_impressions | total_impressions | target_daily_impressions | target_total_impressions | missing_impressions | total_days | remaining_days | set_cpm | feed_facebook_status | instant_article_facebook_status | facebook_stories_facebook_status | marketplace_facebook_status | right_hand_column_facebook_status | reward |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 0 | 2022-05-30 | Monday | 21000 | 1244.0 | 1244.0 | 1400.0 | 1400.0 | 156.0 | 15.0 | 13.0 | 3.0 | Active | Active | Active | Active | Inactive | -156.0 |
| 1 | 0 | 2022-06-01 | Wednesday | 21000 | 915.0 | 2159.0 | 1400.0 | 2800.0 | 641.0 | 15.0 | 11.0 | 3.0 | Active | Active | Active | Active | Inactive | -641.0 |
| 2 | 0 | 2022-06-08 | Wednesday | 21000 | 5225.0 | 7384.0 | 1400.0 | 4200.0 | -3184.0 | 15.0 | 4.0 | 3.0 | Active | Active | Active | Active | Active | -3184.0 |
| 3 | 0 | 2022-06-09 | Thursday | 21000 | 4402.0 | 11786.0 | 1400.0 | 5600.0 | -6186.0 | 15.0 | 3.0 | 3.0 | Active | Active | Active | Active | Active | -6186.0 |
| 4 | 0 | 2022-06-10 | Friday | 21000 | 3202.0 | 14988.0 | 1400.0 | 7000.0 | -7988.0 | 15.0 | 2.0 | 2.0 | Active | Active | Active | Active | Active | -7988.0 |
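The reward values in the table above equal -|target_total_impressions - total_impressions|, that is, -|missing_impressions|. DataFrame.apply with axis=1 calls a Python function once per row, so it can become slow on large datasets. The sketch below computes the same cumulative-gap reward in vectorized form; the helper name add_reward is our own.

```python
import pandas as pd


def add_reward(df: pd.DataFrame) -> pd.DataFrame:
    """Return a copy of df with a vectorized cumulative-gap reward column."""
    out = df.copy()
    # Same value as -abs(target_total_impressions - total_impressions) per row
    out["reward"] = -(out["target_total_impressions"] - out["total_impressions"]).abs()
    return out


# total_impressions and target_total_impressions of the five rows shown above
sample = pd.DataFrame({
    "total_impressions": [1244.0, 2159.0, 7384.0, 11786.0, 14988.0],
    "target_total_impressions": [1400.0, 2800.0, 4200.0, 5600.0, 7000.0],
})
print(add_reward(sample)["reward"].tolist())  # [-156.0, -641.0, -3184.0, -6186.0, -7988.0]
```

On the full dataset, this becomes df_historical_fb_camapigns = add_reward(df_historical_fb_camapigns).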

Dataset Preparation

To train the RL agent, we prepare the dataset by specifying:

- State columns: Variables describing the campaign’s current environment (e.g., daily_impressions, day_of_week).
- Action columns: Variables representing the campaign settings that can be controlled (e.g., set_cpm, feed_facebook_status).
- Reward column: The output of the reward function, indicating the quality of a decision.
- Unit and time columns: Identifiers for each campaign and for the temporal order of its data points.

Example Configuration

```python
# Variables describing the campaign's current situation
state_cols = [
    'daily_impressions', 'day_of_week', 'impression_goal',
    'total_impressions', 'target_daily_impressions', 'missing_impressions',
]

# Campaign settings the agent can control
action_cols = [
    'set_cpm', 'feed_facebook_status', 'instant_article_facebook_status',
    'facebook_stories_facebook_status', 'marketplace_facebook_status', 'right_hand_column_facebook_status',
]

reward_col = 'reward'
timestamp_col = 'date'
unit_col = 'campaign_id'
```
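Before building the dataset, it can help to confirm that every configured column exists and is assigned to exactly one role. The helper validate_column_roles below is a hypothetical sanity check and not part of pi_optimal. It also returns the columns that no role uses.

```python
def validate_column_roles(columns, state_cols, action_cols, reward_col, timestamp_col, unit_col):
    """Hypothetical check: every configured column exists and has exactly one role.

    Returns the DataFrame columns that are not assigned to any role.
    """
    assigned = [*state_cols, *action_cols, reward_col, timestamp_col, unit_col]
    missing = sorted(set(assigned) - set(columns))
    if missing:
        raise KeyError(f"Columns not found in the DataFrame: {missing}")
    duplicated = sorted({col for col in assigned if assigned.count(col) > 1})
    if duplicated:
        raise ValueError(f"Columns assigned to more than one role: {duplicated}")
    return sorted(set(columns) - set(assigned))


# Columns of the historical dataset after adding the reward column
columns = [
    'campaign_id', 'date', 'day_of_week', 'impression_goal', 'daily_impressions',
    'total_impressions', 'target_daily_impressions', 'target_total_impressions',
    'missing_impressions', 'total_days', 'remaining_days', 'set_cpm',
    'feed_facebook_status', 'instant_article_facebook_status',
    'facebook_stories_facebook_status', 'marketplace_facebook_status',
    'right_hand_column_facebook_status', 'reward',
]
state_cols = [
    'daily_impressions', 'day_of_week', 'impression_goal',
    'total_impressions', 'target_daily_impressions', 'missing_impressions',
]
action_cols = [
    'set_cpm', 'feed_facebook_status', 'instant_article_facebook_status',
    'facebook_stories_facebook_status', 'marketplace_facebook_status', 'right_hand_column_facebook_status',
]
unused = validate_column_roles(columns, state_cols, action_cols, 'reward', 'date', 'campaign_id')
print(unused)  # ['remaining_days', 'target_total_impressions', 'total_days']
```

With this configuration, remaining_days, target_total_impressions and total_days are not passed to the agent. This makes it easy to see which available information the agent does not observe.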

Configuration

Besides the columns mentioned above, we can also adjust the number of lookback timesteps used to predict how the campaign will evolve. In our case, we consider the last 7 days of data to make a decision. Next, we initialize the dataset, which preprocesses the features and prepares the data for training.

```python
import pi_optimal as po

LOOKBACK_TIMESTEPS = 7  # number of past timesteps (days) used for each decision

historical_dataset = po.datasets.timeseries_dataset.TimeseriesDataset(
    df=df_historical_fb_camapigns,
    state_columns=state_cols,
    action_columns=action_cols,
    reward_column=reward_col,
    timestep_column=timestamp_col,
    unit_index=unit_col,
    lookback_timesteps=LOOKBACK_TIMESTEPS,
)
```
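The sketch below makes the lookback idea concrete. It stacks the most recent LOOKBACK_TIMESTEPS rows of each campaign into one sample, so a decision at a given timestep sees the preceding 7 rows. This only illustrates the concept under our own assumptions. lookback_windows is a hypothetical helper, and pi_optimal’s TimeseriesDataset may build its windows differently, for example by padding the first timesteps of a campaign.

```python
import numpy as np
import pandas as pd


def lookback_windows(df, unit_col, time_col, feature_cols, lookback):
    """Stack the `lookback` most recent rows of each unit into one sample.

    Hypothetical helper for illustration only; returns an array of shape
    (n_windows, lookback, n_features). Units with fewer than `lookback`
    rows produce no windows here (no padding).
    """
    windows = []
    for _, group in df.sort_values([unit_col, time_col]).groupby(unit_col):
        values = group[feature_cols].to_numpy(dtype=float)
        # Each window ends at row `end - 1` and covers the preceding `lookback` rows
        for end in range(lookback, len(values) + 1):
            windows.append(values[end - lookback:end])
    if not windows:
        return np.empty((0, lookback, len(feature_cols)))
    return np.stack(windows)


# Toy data: campaign 0 has 9 daily rows, campaign 1 has 7
toy = pd.DataFrame({
    "campaign_id": [0] * 9 + [1] * 7,
    "date": list(pd.date_range("2022-06-01", periods=9)) + list(pd.date_range("2022-06-01", periods=7)),
    "daily_impressions": np.arange(16, dtype=float),
    "set_cpm": np.full(16, 3.0),
})
X = lookback_windows(toy, "campaign_id", "date", ["daily_impressions", "set_cpm"], lookback=7)
print(X.shape)  # (4, 7, 2)
```

Windows are counted in rows. They therefore span 7 calendar days only when a campaign has exactly one row per day. The historical table above shows gaps between dates, so this distinction matters.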

Agent Initialization and Training

Using pi_optimal, we initialize an RL agent and train it to optimize campaign performance. Constraints keep the recommendations feasible and cost-effective, for example by bounding the CPM or restricting which placement statuses are allowed.

```python
import numpy as np
from pi_optimal.agents.agent import Agent

agent = Agent()

# Constraint values follow the order of action_cols:
# CPM, Feed, Instant Article, Stories, Marketplace, Right Hand Column
agent.train(
    dataset=historical_dataset,
    constraints={
        'min': np.array([0.1, "Active", "Active", "Active", "Active", "Active"]),
        'max': np.array([9.0, "Inactive", "Inactive", "Inactive", "Inactive", "Inactive"]),
    },
)
```
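Conceptually, the constraints bound each action dimension. The CPM must stay between 0.1 and 9.0. As we read the min/max pairs, each placement status may be either Active or Inactive. The hypothetical enforce_constraints helper below applies those bounds to a single candidate action. It only illustrates the idea and is not how pi_optimal applies constraints internally.

```python
CPM_MIN, CPM_MAX = 0.1, 9.0                # CPM bounds from the constraints above
ALLOWED_STATUSES = {"Active", "Inactive"}  # our reading of the min/max status pairs


def enforce_constraints(action):
    """Hypothetical helper: clip the CPM and validate the placement statuses of one action.

    `action` follows the order of action_cols: CPM first, then the five placement statuses.
    """
    # float() also handles CPM values stored as strings
    cpm = min(max(float(action[0]), CPM_MIN), CPM_MAX)
    statuses = [str(status) for status in action[1:]]
    unknown = [status for status in statuses if status not in ALLOWED_STATUSES]
    if unknown:
        raise ValueError(f"Unknown placement status(es): {unknown}")
    return [cpm, *statuses]


print(enforce_constraints([12.5, "Active", "Inactive", "Active", "Active", "Inactive"]))
# [9.0, 'Active', 'Inactive', 'Active', 'Active', 'Inactive']
```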

Evaluation and Action Prediction

The trained RL agent is evaluated using unseen campaign data. For example, we select an underperforming campaign and analyze the agent’s recommendations for the last seven days.

Key Steps:

- Extract recent campaign data.

- Create an inference dataset using the same configuration as the training set.

- Use the agent to predict optimal actions.

Load Current Data

Here we select two campaigns that were not part of the training set. One campaign is underperforming, so the agent should increase the CPM; the other is overperforming, so the agent should decrease the CPM. We start with the underperforming campaign.

# Load the current adset control data

# df_current_adset_control = pd.read_csv('data/fb_current_over_deliver.csv', parse_dates=['date'])

df_current_adset_control = pd.read_csv('data/fb_current_under_deliver.csv', parse_dates=['date'])

# Apply the reward calculation

df_current_adset_control["reward"] = df_current_adset_control.apply(calculate_reward, axis=1)

from util_plot import plot_campaign

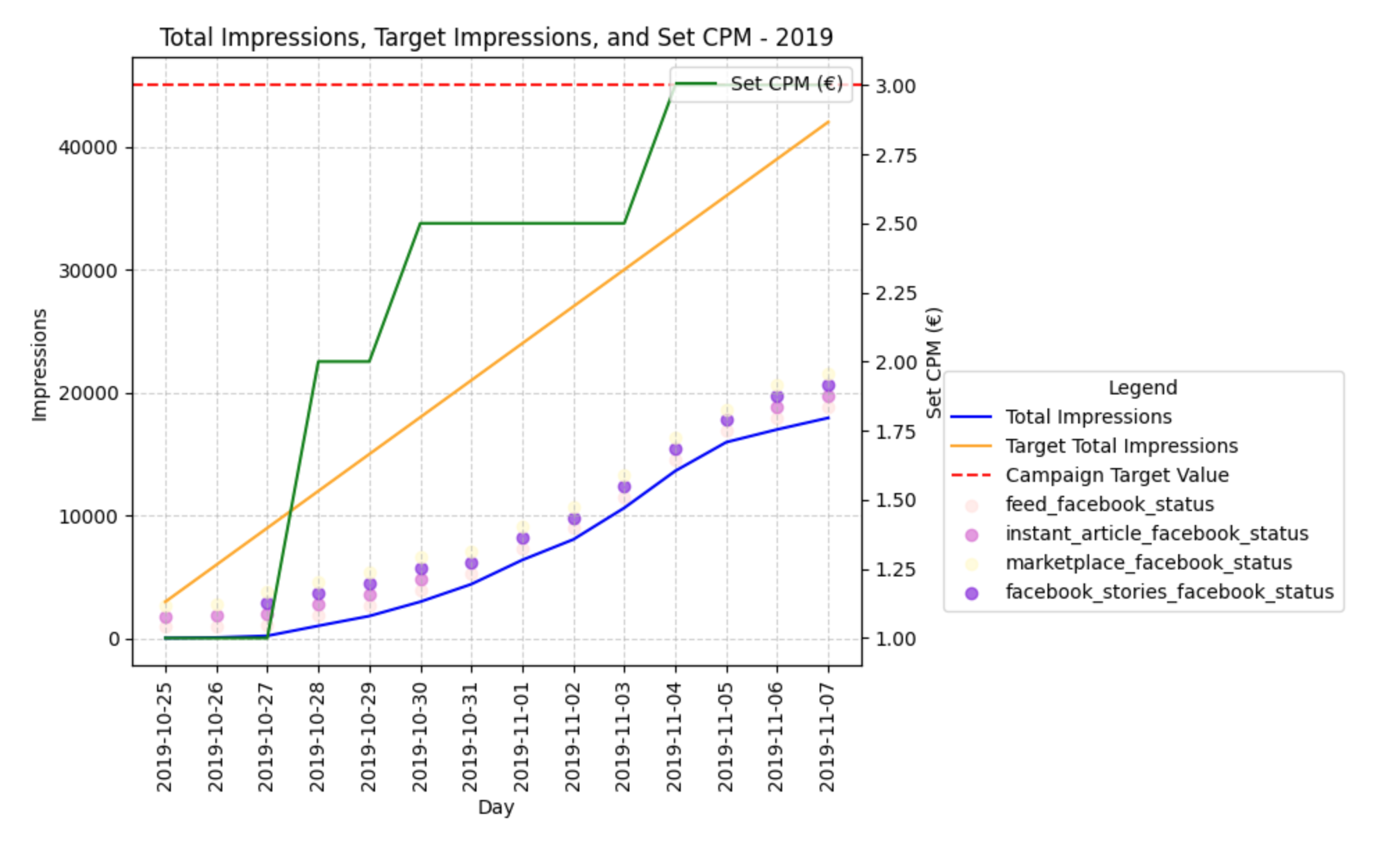

plot_campaign(df_current_adset_control.copy())
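You can read whether a campaign is under- or over-delivering from its latest cumulative gap. missing_impressions is target_total_impressions minus total_impressions. A positive value therefore means the campaign is behind its target, and a negative value means it is ahead. Below is a minimal sketch using a hypothetical delivery_status helper with an optional tolerance parameter of our own.

```python
import pandas as pd


def delivery_status(campaign: pd.DataFrame, tolerance: float = 0.0) -> str:
    """Classify a single campaign by its latest cumulative impression gap.

    Hypothetical helper; a positive gap (target minus actual) means under-delivery.
    """
    latest = campaign.sort_values("date").iloc[-1]
    gap = latest["target_total_impressions"] - latest["total_impressions"]
    if gap > tolerance:
        return "under-delivering"
    if gap < -tolerance:
        return "over-delivering"
    return "on target"


# The five rows of campaign 0 from the historical data preview
campaign_0 = pd.DataFrame({
    "date": pd.to_datetime(["2022-05-30", "2022-06-01", "2022-06-08", "2022-06-09", "2022-06-10"]),
    "total_impressions": [1244.0, 2159.0, 7384.0, 11786.0, 14988.0],
    "target_total_impressions": [1400.0, 2800.0, 4200.0, 5600.0, 7000.0],
})
print(delivery_status(campaign_0))  # over-delivering
```

Calling delivery_status(df_current_adset_control) applies the same check to the loaded campaign.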

Create Current Dataset

We want to see what would have happened if the agent had made the decisions for the last 7 days of the campaign. To do so, we cut off the last 7 days and create an inference dataset from the remaining data. The agent then predicts the optimal actions from this dataset.

```python
# Drop the last 7 rows so that the agent decides those days instead
df_current_adset_control = df_current_adset_control.iloc[:-7]

current_dataset = po.datasets.timeseries_dataset.TimeseriesDataset(
    df=df_current_adset_control,
    dataset_config=historical_dataset.dataset_config,  # same configuration as the training set
    lookback_timesteps=LOOKBACK_TIMESTEPS,
    train_processors=False,
    is_inference=True,
)
```
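iloc[:-7] drops the last 7 rows of the whole DataFrame. That matches "the last 7 days" only when the file holds a single campaign with one row per day. For files with several campaigns, a per-campaign split is safer. The sketch below uses split_last_n_rows, a hypothetical helper that also returns the held-out rows. Keeping those rows lets you later compare the agent’s recommendations with what actually happened.

```python
import pandas as pd


def split_last_n_rows(df: pd.DataFrame, unit_col: str, time_col: str, n: int):
    """Split each unit's history into (earlier rows, last n rows)."""
    df = df.sort_values([unit_col, time_col])
    # 0 for each unit's latest row, 1 for the row before it, and so on
    rows_from_end = df.groupby(unit_col).cumcount(ascending=False)
    return df[rows_from_end >= n], df[rows_from_end < n]


# Two toy campaigns with 10 and 9 daily rows
toy = pd.DataFrame({
    "campaign_id": [0] * 10 + [1] * 9,
    "date": list(pd.date_range("2022-06-01", periods=10)) + list(pd.date_range("2022-06-01", periods=9)),
})
history, holdout = split_last_n_rows(toy, "campaign_id", "date", n=7)
print(len(history), len(holdout))  # 5 14
```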

Predict Optimal Actions

```python
best_actions = agent.predict(current_dataset)
```

Interpreting the Results

The agent returns a sequence of optimal actions, one per timestep of the prediction horizon. Here we print them:

```python
# Labels in the same order as action_cols
action_labels = [
    "Maximum CPM",
    "Feed Facebook Status",
    "Instant Article Facebook Status",
    "Facebook Stories Facebook Status",
    "Marketplace Facebook Status",
    "Right Hand Column Facebook Status",
]

for i, action in enumerate(best_actions):
    print(f"Timestep {i}:")
    for label, value in zip(action_labels, action):
        print(f"{label}:", value)
    print()
    print("--------------------")
    print()
```

```
Timestep 0:
Maximum CPM: 4.1440664897204105
Feed Facebook Status: Inactive
Instant Article Facebook Status: Inactive
Facebook Stories Facebook Status: Active
Marketplace Facebook Status: Inactive
Right Hand Column Facebook Status: Active

--------------------

Timestep 1:
Maximum CPM: 5.8248877751997234
Feed Facebook Status: Inactive
Instant Article Facebook Status: Inactive
Facebook Stories Facebook Status: Active
Marketplace Facebook Status: Active
Right Hand Column Facebook Status: Active

--------------------

Timestep 2:
Maximum CPM: 5.629406419293266
Feed Facebook Status: Active
Instant Article Facebook Status: Inactive
Facebook Stories Facebook Status: Active
Marketplace Facebook Status: Active
Right Hand Column Facebook Status: Active

--------------------

Timestep 3:
Maximum CPM: 7.144393154823279
Feed Facebook Status: Inactive
Instant Article Facebook Status: Active
Facebook Stories Facebook Status: Inactive
Marketplace Facebook Status: Inactive
Right Hand Column Facebook Status: Active

--------------------
```
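Printing each action works for a quick look. Collecting the recommendations into a DataFrame makes them easier to compare or export. Like the printing loop above, the sketch below assumes that each element of best_actions lists the action values in the order of action_cols. actions_to_frame is a hypothetical helper, and it casts the CPM to float in case the actions come back as strings.

```python
import pandas as pd

# Column order assumed to match action_cols
ACTION_COLS = [
    "set_cpm", "feed_facebook_status", "instant_article_facebook_status",
    "facebook_stories_facebook_status", "marketplace_facebook_status",
    "right_hand_column_facebook_status",
]


def actions_to_frame(actions, columns=ACTION_COLS):
    """Hypothetical helper: one row per timestep, one column per action."""
    frame = pd.DataFrame([list(action) for action in actions], columns=columns)
    # Cast the CPM in case the actions are returned as strings
    frame["set_cpm"] = frame["set_cpm"].astype(float)
    frame.index.name = "timestep"
    return frame


# First two timesteps of the printed recommendations
example_actions = [
    [4.1440664897204105, "Inactive", "Inactive", "Active", "Inactive", "Active"],
    [5.8248877751997234, "Inactive", "Inactive", "Active", "Active", "Active"],
]
print(actions_to_frame(example_actions))
```

With the agent’s output, this becomes actions_to_frame(best_actions).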

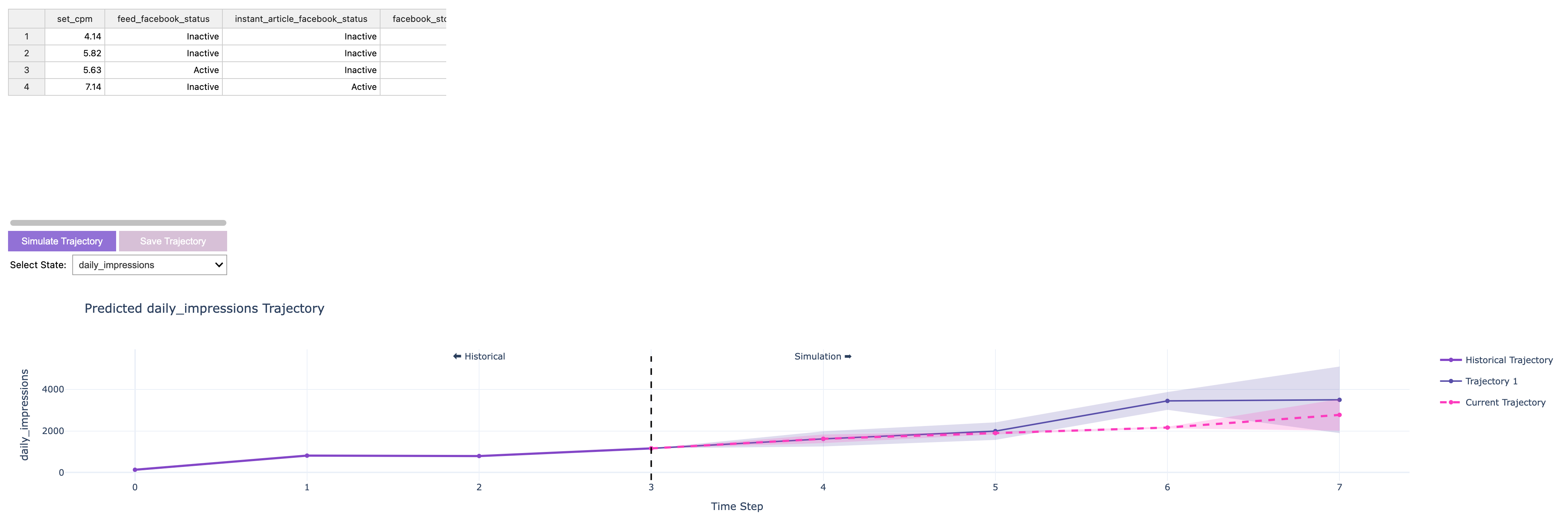

Visualization of Scenarios

pi_optimal provides visualization tools to analyze the agent’s recommendations. For instance, we can compare the agent’s suggested CPM values and placement statuses with actual performance, ensuring transparency and interpretability.

from pi_optimal.utils.trajectory_visualizer import TrajectoryVisualizer

trajectory_visualizer = TrajectoryVisualizer(agent, current_dataset, best_actions=best_actions)

trajectory_visualizer.display()

Conclusion

This notebook demonstrates how pi_optimal can be used to automate the control of Facebook campaigns so that they reach a specific impression goal. We have also seen how pi_optimal can run hypothetical scenarios, which helps us understand how the agent would react to different settings and situations.

Key Highlights

- Dataset Preparation: The dataset was prepared for training by defining the key parameters required for the RL agent. No manual preprocessing is needed, as the pi_optimal package handles this step.
- Agent Training: The RL agent was trained on the dataset and learned to adjust the campaign settings to reach the desired impression goal.
- Action Prediction: The agent predicted the optimal actions for an underperforming campaign. The same workflow applies to the overperforming campaign by loading data/fb_current_over_deliver.csv instead.
- Simulation Visualization: The agent’s results were visualized to understand how it would react to different scenarios.

Next Steps

- Enhance Reward Function: Use custom reward functions that incorporate additional features, such as budget constraints, audience targeting, or creative performance. More complex reward functions can guide the agent toward decisions that align with broader campaign objectives.
- Hyperparameter Tuning: Tune the hyperparameters of the RL agent to improve its performance. A hyperparameter search can identify the configuration that best enables the agent to reach the impression goal.
- Real-Time Decision-Making: Deploy the agent in a real-time decision-making system to automate the optimization of Facebook campaigns. Integrating the agent into your existing ad management platform lets you use it to improve campaign performance and efficiency.
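As an illustration of the first next step, the sketch below adds a penalty for estimated overspend to the cumulative impression gap, -|missing_impressions|. It rests on several assumptions that are not part of the dataset:

- daily_budget is a hypothetical value.
- Spend is estimated as daily_impressions / 1000 × set_cpm, which is an upper bound if set_cpm is a maximum CPM.
- overspend_weight is a weight of our own that converts currency onto the same scale as impressions.

```python
import pandas as pd


def budget_aware_reward(df: pd.DataFrame, daily_budget: float, overspend_weight: float = 1.0) -> pd.Series:
    """Hypothetical reward: negative cumulative impression gap minus a weighted overspend penalty."""
    gap = (df["target_total_impressions"] - df["total_impressions"]).abs()
    # Assumption: spend is approximated as impressions / 1000 * CPM
    estimated_spend = df["daily_impressions"] / 1000.0 * df["set_cpm"]
    # Only spending above the budget is penalized
    overspend = (estimated_spend - daily_budget).clip(lower=0.0)
    return -(gap + overspend_weight * overspend)


# First row of the historical data preview; daily_budget=3.0 is an arbitrary illustrative value
row = pd.DataFrame({
    "daily_impressions": [1244.0],
    "total_impressions": [1244.0],
    "target_total_impressions": [1400.0],
    "set_cpm": [3.0],
})
print(budget_aware_reward(row, daily_budget=3.0, overspend_weight=100.0).iloc[0])
```

The weight decides how many impressions of shortfall one unit of overspend is worth. Choosing it is a business decision rather than a modeling detail.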